Visual, Spatial, Geometric-Preserved Place Recognition for Cross-View and Cross-Modal Collaborative Perception

Abstract

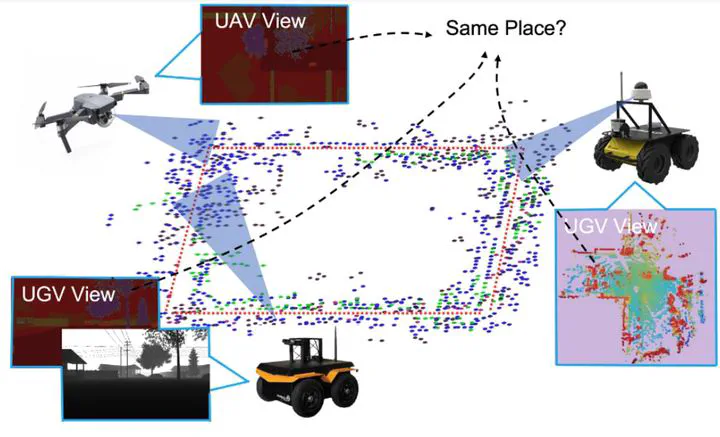

Place recognition plays an important role in multi-robot collaborative perception, such as aerial-ground search and rescue, where robots need to identify the same places they have visited. Recently, semantics-based approaches, which can be categorized as graph-based and geometric-based methods, have shown promising performance in addressing cross-view and cross-modal challenges in place recognition. However, both categories have shortcomings, including ignoring geometric cues and being affected by large non-overlapping regions between observations. In this paper, we introduce a novel approach that integrates semantic graph matching and distance field (DF) matching for cross-view and cross-modal place recognition. Our method uses a graph representation to encode the visual-spatial cues of semantics and a set of class-wise DFs to encode the geometric cues of a scene. We then formulate place recognition as a two-step matching problem. First, we perform semantic graph matching to identify the correspondences of semantic objects. Second, we estimate the overlapping regions based on the identified correspondences and align these regions to compute their geometry-based DF similarity. Finally, we integrate the graph-based similarity and the geometry-based DF similarity to match places. We evaluate our approach on two public benchmark datasets, KITTI and AirSim. Compared with previous methods, our approach achieves around 10% improvement in ground-ground place recognition on KITTI and 35% improvement in aerial-ground place recognition on AirSim.
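To make the idea of class-wise distance fields and similarity fusion more concrete, the following is a minimal illustrative sketch in plain Python. It is not the paper's implementation. The grid representation, the clipped per-cell agreement score, the function names (`class_wise_distance_fields`, `df_similarity`, `fuse`), and the fixed fusion `weight` are all assumptions made for illustration. The sketch also assumes the two scenes are already aligned and cropped to their overlapping region, which in the approach described above would follow from the semantic graph matching step.

```python
import math


def class_wise_distance_fields(objects, width, height):
    """Build one distance field per semantic class (hypothetical helper).

    objects: list of (class_label, x, y) tuples on a width x height grid.
    Returns {class_label: grid}, where grid[y][x] is the Euclidean distance
    from cell (x, y) to the nearest object of that class.
    """
    by_class = {}
    for label, x, y in objects:
        by_class.setdefault(label, []).append((x, y))

    fields = {}
    for label, points in by_class.items():
        # Brute-force nearest-object distance; fine for small grids.
        fields[label] = [
            [min(math.hypot(cx - px, cy - py) for px, py in points)
             for cx in range(width)]
            for cy in range(height)
        ]
    return fields


def df_similarity(fields_a, fields_b, max_dist):
    """Mean per-cell agreement in [0, 1] over classes present in both scenes.

    Assumption: differences are clipped at max_dist and mapped linearly to a
    score, which is only one of many possible similarity measures.
    """
    shared = sorted(set(fields_a) & set(fields_b))
    if not shared:
        return 0.0
    scores = []
    for label in shared:
        for row_a, row_b in zip(fields_a[label], fields_b[label]):
            for da, db in zip(row_a, row_b):
                diff = min(abs(da - db), max_dist)
                scores.append(1.0 - diff / max_dist)
    return sum(scores) / len(scores)


def fuse(graph_sim, df_sim, weight=0.5):
    """Weighted sum of graph-based and DF-based similarity (assumed form)."""
    return weight * graph_sim + (1.0 - weight) * df_sim


if __name__ == "__main__":
    query = [("car", 1, 1), ("tree", 3, 2)]
    candidate = [("car", 1, 2), ("tree", 3, 2)]
    fq = class_wise_distance_fields(query, width=5, height=5)
    fc = class_wise_distance_fields(candidate, width=5, height=5)
    geometric = df_similarity(fq, fc, max_dist=5.0)
    # graph_sim would come from a semantic graph matcher; 0.9 is a placeholder.
    print(fuse(graph_sim=0.9, df_sim=geometric))
```

A brute-force distance computation keeps the sketch dependency-free. On realistic grid sizes, a dedicated Euclidean distance transform would be the more practical choice.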

Publication

2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)